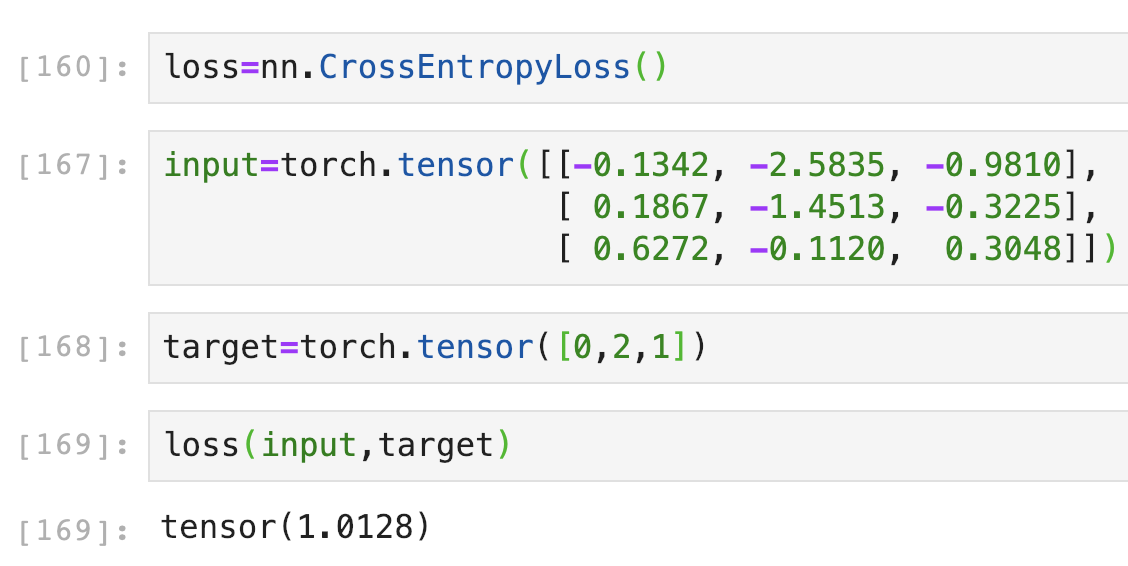

PyTorch cross entropy loss

    import torch
    import torch.nn as nn
    import torchvision.transforms as transforms
    import torchvision.datasets as dsets

    # Set seed
    torch.manual_seed(0)

    '''
    STEP 1: LOADING DATASET
    '''
    train_dataset = dsets.MNIST(root='./data',
                                train=True,
                                transform=transforms.ToTensor(),
                                download=True)

    test_dataset = dsets.MNIST(root='./data',
                               train=False,
                               transform=transforms.ToTensor())

    '''
    STEP 2: MAKING DATASET ITERABLE
    '''
    batch_size = 100
    n_iters = 3000
    num_epochs = n_iters / (len(train_dataset) / batch_size)
    num_epochs = int(num_epochs)

    train_loader = torch.utils.data.DataLoader(dataset=train_dataset,
                                               batch_size=batch_size,
                                               shuffle=True)

    test_loader = torch.utils.data.DataLoader(dataset=test_dataset,
                                              batch_size=batch_size,
                                              shuffle=False)

    '''
    STEP 3: CREATE MODEL CLASS
    '''
    class FeedforwardNeuralNetModel(nn.Module):
        def __init__(self, input_dim, hidden_dim, output_dim):
            super(FeedforwardNeuralNetModel, self).__init__()
            # Linear function
            self.fc1 = nn.Linear(input_dim, hidden_dim)
            # Non-linearity
            self.relu = nn.ReLU()
            # Linear function (readout)
            self.fc2 = nn.Linear(hidden_dim, output_dim)

        def forward(self, x):
            # Linear function
            out = self.fc1(x)
            # Non-linearity
            out = self.relu(out)
            # Linear function (readout)
            out = self.fc2(out)
            return out

    '''
    STEP 4: INSTANTIATE MODEL CLASS
    '''
    input_dim = 28 * 28
    hidden_dim = 100
    output_dim = 10

    model = FeedforwardNeuralNetModel(input_dim, hidden_dim, output_dim)

    '''
    STEP 5: INSTANTIATE LOSS CLASS
    '''
    criterion = nn.CrossEntropyLoss()

    '''
    STEP 6: INSTANTIATE OPTIMIZER CLASS
    '''
    learning_rate = 0.1

    optimizer = torch.optim.SGD(model.parameters(), lr=learning_rate)

    '''
    STEP 7: TRAIN THE MODEL
    '''
    iter = 0
    for epoch in range(num_epochs):
        for i, (images, labels) in enumerate(train_loader):
            # NOTE: the source listing is cut off after `images = images.`;
            # everything below in this loop is an assumed, standard completion.
            # Flatten each 28x28 image so it matches input_dim
            images = images.view(-1, 28 * 28)

            # Clear gradients accumulated from the previous step
            optimizer.zero_grad()

            # Forward pass: raw class scores (logits)
            outputs = model(images)

            # Cross entropy loss between logits and integer labels
            loss = criterion(outputs, labels)

            # Backward pass and parameter update
            loss.backward()
            optimizer.step()

            iter += 1
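
`nn.CrossEntropyLoss`, the criterion used in this listing, takes raw class scores (logits) and integer labels, applies a log-softmax, and by default averages the negative log-probability of the correct class over the batch. The sketch below reproduces that calculation in plain Python so the arithmetic is visible. The function name `cross_entropy` and the sample inputs are illustrative assumptions, not part of the original listing or of PyTorch.

```python
import math


def cross_entropy(logits, targets):
    """Mean cross entropy for a batch of raw scores (hypothetical helper).

    logits:  list of rows, one row of class scores per sample
    targets: list of integer class indices, one per sample
    """
    total = 0.0
    for row, target in zip(logits, targets):
        # Numerically stable log-sum-exp: subtract the row maximum first
        m = max(row)
        log_sum_exp = m + math.log(sum(math.exp(z - m) for z in row))
        # -log softmax(row)[target] = log_sum_exp - row[target]
        total += log_sum_exp - row[target]
    return total / len(targets)


# Ten equal scores: the model is maximally unsure, so the loss is ln(10)
uniform_loss = cross_entropy([[0.0] * 10], [0])

# A large score on the correct class drives the loss towards zero
confident_loss = cross_entropy([[10.0] + [0.0] * 9], [0])

print(uniform_loss, confident_loss)
```

With ten classes, as in MNIST, a model whose scores are roughly equal starts near ln 10 ≈ 2.30, which is a handy sanity check for the first few training iterations.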

